ISA extension for RISC-V architecture for probabilistic functions

Bayesian neural networks (BNNs) provide uncertainty metrics that capture both the uncertainty in the processed data and the uncertainty introduced by the selected model. We have identified several practical applications for these metrics, such as flagging predictions that do not reach the required level of accuracy, or detecting when predictions are affected by increased noise in the input data. However, BNNs carry a higher computational cost, as their probabilistic nature requires more complex inference methods. These ISA extensions can reduce the need for costly software-based approximations and enable more efficient utilization of hardware resources, making Bayesian deep learning more practical for real-time and edge computing applications.

BnnRV Toolchain

BnnRV is a toolchain designed to generate optimized C source code for the inference of BNN models trained using BayesianTorch. By translating trained models into C, BnnRV enables the deployment of uncertainty-aware NNs on resource-constrained devices.

A forward pass of a BNN requires sampling the weight distributions learned during training. These distributions are Gaussian and need to be generated using a Gaussian RNG algorithm.

Gaussian sampling remains computationally expensive, even when using a simple algorithm. To address this issue, we have proposed an optimization that replaces Gaussian distributions with Uniform distributions for inference, significantly reducing the computational complexity of weight sampling.

This optimization leverages the Central Limit Theorem (CLT), assuming that the outputs of BNN neurons follow a Gaussian distribution, even if the weight distributions themselves are not Gaussian.

BNN neurons, like those of traditional NNs, perform MAC operations. These accumulation operations are the basis for applying the CLT.
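As an illustration of the Uniform substitution, the following is a minimal sketch in floating point. It assumes that each Gaussian weight N(mu, sigma) is replaced by a Uniform distribution with the same mean and standard deviation, that is, U(mu - sqrt(3)·sigma, mu + sqrt(3)·sigma); the text does not state how the Uniform parameters are chosen, so this moment matching is an assumption. The names `uniform01`, `sample_uniform_weight`, and `neuron_output` are hypothetical, and the small LCG only stands in for whichever Uniform RNG is actually used.

```c
#include <stdint.h>

/* sqrt(3): half-width factor of a Uniform distribution with unit std. */
static const double SQRT3 = 1.7320508075688772;

/* Hypothetical stand-in RNG: a small LCG returning a value in [0, 1). */
static uint32_t lcg_state = 12345u;
static double uniform01(void) {
    lcg_state = lcg_state * 1664525u + 1013904223u;
    return (double)(lcg_state >> 8) / 16777216.0; /* top 24 bits */
}

/* Assumption: draw from U(mu - sqrt(3)*sigma, mu + sqrt(3)*sigma),
 * which has mean mu and standard deviation sigma. */
static double sample_uniform_weight(double mu, double sigma) {
    double half_width = SQRT3 * sigma;
    return mu + half_width * (2.0 * uniform01() - 1.0);
}

/* Neuron pre-activation: a sum of n products with freshly sampled
 * weights. This accumulation is what the CLT argument applies to. */
static double neuron_output(const double *mu, const double *sigma,
                            const double *x, int n) {
    double acc = 0.0;
    for (int i = 0; i < n; i++) {
        acc += sample_uniform_weight(mu[i], sigma[i]) * x[i];
    }
    return acc;
}
```

With enough inputs per neuron, the accumulated sum is expected to be approximately Gaussian regardless of the shape of the individual weight distributions.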

The code for BNN inference, incorporating the Uniform weight optimization, relies on a Uniform RNG algorithm and two fixed-point MAC operations: the first for weight generation and the second for the standard weight accumulation used in NNs. A fixed-point MAC operation involves a multiplication, a bit shift to adjust the scale after the multiplication, and an addition, resulting in a total of three instructions per MAC operation.
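The two MAC operations described above can be sketched in plain C as follows. This is a minimal illustration rather than the code BnnRV generates: the function names `fx_madd` and `bnn_neuron_step` are hypothetical, the 64-bit intermediate product is an assumption, and the weight is assumed to be formed as `mu + sigma * u`, where `u` is a fixed-point Uniform sample.

```c
#include <stdint.h>

/* Fixed-point multiply-accumulate: acc + ((a * b) >> scale).
 * On a base RISC-V core this maps to a multiply, a shift and an add.
 * Note: right-shifting a negative value is implementation-defined in C;
 * mainstream compilers perform an arithmetic shift. */
static int32_t fx_madd(int32_t a, int32_t b, int32_t acc, unsigned scale) {
    int64_t prod = (int64_t)a * (int64_t)b; /* assumed 64-bit intermediate */
    return acc + (int32_t)(prod >> scale);
}

/* One input of a BNN neuron (hypothetical helper).
 * First MAC:  w   = mu  + sigma * u   (weight generation)
 * Second MAC: acc = acc + w * x       (standard accumulation) */
static int32_t bnn_neuron_step(int32_t mu, int32_t sigma, int32_t u,
                               int32_t x, int32_t acc, unsigned scale) {
    int32_t w = fx_madd(sigma, u, mu, scale);
    return fx_madd(w, x, acc, scale);
}
```

For example, with 16 fractional bits, `fx_madd(98304, 131072, 32768, 16)` computes 1.5 × 2.0 + 0.5 and returns 229376, the fixed-point representation of 3.5.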

Extending RISC-V

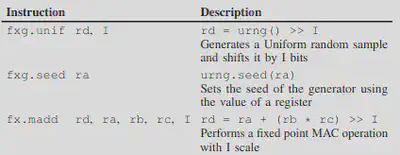

We have enhanced the performance of BNN inference with two new key instructions: a fixed-point MAC and a Uniform RNG. Additionally, a complementary instruction for random seed configuration is included for completeness.

Using this extension, the critical computation of BNN inference requires only three assembly instructions, which execute in three cycles on our RISC-V core.

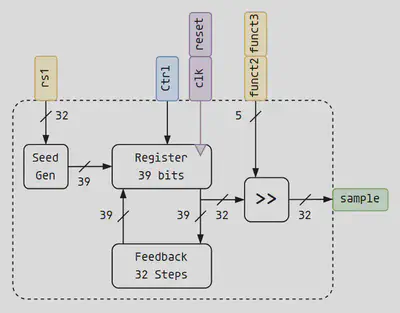

To implement the Uniform RNG functional unit, a lookahead linear feedback shift register (LFSR) was used. An LFSR consists of a register and a feedback network that uses XOR gates to implement a generator polynomial, producing one random bit per cycle. However, generating multiple bits with low correlation requires a more complex method. To generate 32-bit samples, this work utilizes a 39-bit LFSR with a 32-step lookahead mechanism and a shifter to set the scale of the sample.
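A software model can help clarify what the lookahead LFSR computes. The sketch below is illustrative only and rests on several assumptions: the generator polynomial is not given in the text, so x^39 + x^35 + 1 (a commonly tabulated maximal-length choice for 39 bits) is used; the 32-step lookahead is modelled as 32 sequential single-bit steps, whereas hardware would compute them combinationally in one cycle; and the shifter is assumed to keep the top `scale` bits so that the sample is a fraction in [0, 1) with `scale` fractional bits. All function names are hypothetical.

```c
#include <stdint.h>

#define LFSR39_MASK ((1ULL << 39) - 1ULL)

/* One single-bit step of a 39-bit Fibonacci LFSR.
 * Assumed taps: bits 39 and 35 (1-indexed), i.e. x^39 + x^35 + 1. */
static uint64_t lfsr39_step(uint64_t state) {
    uint64_t bit = ((state >> 38) ^ (state >> 34)) & 1ULL;
    return ((state << 1) | bit) & LFSR39_MASK;
}

/* Model of the 32-step lookahead: advance 32 steps and return the
 * low 32 bits of the new state. The state must be seeded non-zero,
 * since the all-zero state never changes. */
static uint32_t lfsr39_next32(uint64_t *state) {
    uint64_t s = *state;
    for (int i = 0; i < 32; i++) {
        s = lfsr39_step(s);
    }
    *state = s;
    return (uint32_t)s;
}

/* Assumed role of the shifter: keep `scale` bits (0..32) so the result
 * is a fixed-point fraction in [0, 1) with `scale` fractional bits. */
static uint32_t lfsr39_uniform_fx(uint64_t *state, unsigned scale) {
    uint64_t raw = lfsr39_next32(state);
    return (uint32_t)(raw >> (32u - scale));
}
```

Advancing 32 steps between samples means consecutive 32-bit outputs do not share bits, which is the motivation for a lookahead design over reading overlapping windows of a single-step LFSR.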

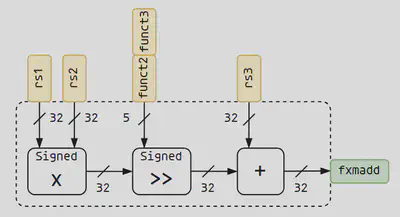

The fixed-point arithmetic unit uses discrete 32-bit multiplication hardware, a 32-bit adder, and a shifter. The fixed-point scale represents the number of bits assigned to the fractional part of a number and can be stored using 5 bits for 32-bit precision. The fx.madd instruction uses the RISC-V R4 encoding. This encoding provides 5 control bits divided into two fields, funct2 (2 bits) and funct3 (3 bits). These 5 bits are enough to encode the scale value as an immediate within the 32 bits of the instruction format.
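The field layout of the R4 format makes it possible to sketch how such an instruction word could be assembled. The bit positions below follow the standard RISC-V R4-type layout; the rest is assumed for illustration: the text does not say which opcode fx.madd uses (custom-0, 0x0B, is chosen here), nor how the scale is split between the two fields (the upper 2 bits are placed in funct2 and the lower 3 bits in funct3). The function names are hypothetical.

```c
#include <stdint.h>

/* Assumption: custom-0 major opcode; the real opcode is not stated. */
#define FX_MADD_OPCODE 0x0Bu

/* R4-type layout:
 * rs3[31:27] funct2[26:25] rs2[24:20] rs1[19:15]
 * funct3[14:12] rd[11:7] opcode[6:0]
 * Assumed split: scale[4:3] -> funct2, scale[2:0] -> funct3. */
static uint32_t encode_fx_madd(unsigned rd, unsigned rs1, unsigned rs2,
                               unsigned rs3, unsigned scale) {
    uint32_t funct2 = (scale >> 3) & 0x3u;
    uint32_t funct3 = scale & 0x7u;
    return ((uint32_t)(rs3 & 0x1Fu) << 27) | (funct2 << 25) |
           ((uint32_t)(rs2 & 0x1Fu) << 20) |
           ((uint32_t)(rs1 & 0x1Fu) << 15) | (funct3 << 12) |
           ((uint32_t)(rd & 0x1Fu) << 7) | FX_MADD_OPCODE;
}

/* Recover the 5-bit scale immediate from an instruction word. */
static unsigned decode_fx_scale(uint32_t insn) {
    return (((insn >> 25) & 0x3u) << 3) | ((insn >> 12) & 0x7u);
}
```

Under these assumptions, an fx.madd with rd = x10, rs1 = x11, rs2 = x12, rs3 = x13 and a scale of 16 encodes to 0x6CC5850B.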

Using immediate values means that the code needs to be recompiled every time the fixed-point scales change, which should rarely happen after the BNN model is deployed.

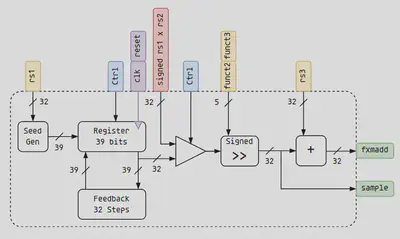

A more optimized implementation combines both functional units and shares the shifter hardware. In addition, instead of using a discrete multiplier, it uses the multiplier hardware already present in the base RISC-V core, reducing the area requirements.

Experimental results

On average, sampling a Uniform distribution using the proposed software method achieves a speedup of 4.94×, while utilizing the proposed RISC-V instructions results in an 8.93× speedup.

The optimized implementation results in an average energy consumption reduction of 87.79%.